
10 Things We Have Learned About Building Complex Multi-Topic Agentforce Agents

Written by Dave McCall, VP, Innovation at Thunder

In my 25 years of technology implementation, generative AI is like no technology I have used before. Moving from deterministic development (writing code or building flows) to using genAI has forced my team and me to create a whole new set of best practices. Agentforce provides a framework for building agents that offers some structure that other approaches do not, but there is still much to learn in order to build your first complex agent. Having worked with a few dozen customers to implement Agentforce, our team has learned a number of valuable lessons. We’re developing a set of best practices for delivering agents and today I’d like to show you some of what we’ve learned.

The Smart Intern

To start, I want to offer a different way of thinking about Agentforce. As I mentioned, unlike almost all software that came before it, generative AI isn’t deterministic: the same inputs often produce slightly different results. Then again, you will rarely see the same inputs twice, because the “input” to an agent is every single word in the entire conversation.

We should think of an agent as a smart intern. It understands language, it has general knowledge, and it is eager to talk. If I were to hire a smart intern tomorrow, I would give her a small set of topics to start with. I would try to give her sufficiently thorough instructions. I would give her access to the right systems to perform any actions she needs to look up or update data. I wouldn’t expect her to do things correctly if her instructions were unclear or incomplete. I wouldn’t expect her to know anything about data I hadn’t given her access to.

The smart intern approach helps us think about our agent correctly. It helps guide how we instruct it, how we test it, and our expectations for how it will perform.

An agent is better than a smart intern in a few ways. An agent will follow your instructions every time, unlike an intern who is sometimes distracted or thinks they have a better way. An agent will look up the answer every time, unlike a smart intern who is pretty sure they know the answer and doesn’t bother checking whether it has changed. An agent is very good at processing large amounts of data, even unstructured data, and synthesizing an answer from it. And an agent can perform an almost unlimited number of tasks in parallel, unlike an intern who isn’t as good at multitasking as she thinks she is.

An agent also has some flaws. Your agent will follow your instructions every time, unlike your smart intern, who might sense from the customer’s reaction that the instructions are wrong. If your agent doesn’t have access to the data it needs for a task, it won’t ask for it. And most importantly, if your agent is getting things wrong, it can make mistakes much faster than a smart intern.

| Smart Intern | Agent |
| --- | --- |
| ❌ Will follow your instructions until they get distracted or think they have a better way | ✅ Will always follow your instructions |
| ❌ Looks up the answer unless they think they already know it | ✅ Looks up the answer every time and therefore always has the latest information |
| ❌ Is overwhelmed by large amounts of data | ✅ Is good at quickly synthesizing large amounts of data |
| ❌ Performs a handful of tasks in parallel | ✅ Can perform almost unlimited tasks in parallel |
| ✅ Will question their instructions if they get bad reactions or results | ❌ Will not question its instructions |
| ✅ Will ask for access to the data they need to provide thorough answers | ❌ Performs as if the data it has been given is all the data that exists |
| ✅ Because they handle only a handful of tasks, if they are getting things wrong, they do it slowly | ❌ Because it handles lots of tasks in parallel, it can get a lot wrong quickly |

Let’s take a look at how this idea plays out in a working agent. I’m going to show you a demonstration agent we built for hospitality, designed to help hotels, resorts, restaurant groups, and cruise lines provide rich experiences to their guests and empower employees to do the same.

To start, please imagine with me that the user, Sophia, has made a reservation at our luxury resort, Thunder Bay. She wants to learn more about her stay and attempt a few transactions, so she turns to the Thunder Bay-gent for help. I’m going to use this agent to illustrate each of the tips I share with you today.

I have 10 tips for you, so let’s get right to it. Here are 10 things we at Thunder have learned about building complex agents.

1) Teach your agent to tell the user what it can do

Confession time. Every time Salesforce’s marketing team posts about an agent that delivered massive efficiency gains for a customer, I try to find that agent on the company’s website and use it. I want to see how it was designed to solve problems. I want to try the use cases. And it is often terribly difficult to figure out what I’m supposed to say to get the agent to do its thing.

This is a problem with all autonomous agents. When you browse a website, it is very clear what information you can find: you can quickly scan its menus to see what it offers, and the navigation menus in web applications show you which functions are available. Not so with conversational agents. It can be very frustrating for a customer to face an agent that performs only two of the almost infinite tasks they might have come to get done.

By default, the behind-the-scenes Salesforce instructions won’t let an agent tell the user about its topics and instructions, and there are very good security reasons for this. So we believe the best thing you can do is give the agent a curated answer for whenever someone asks any variation of “What kinds of things can you help me with?” We intend to bake that into every agent we build for clients.

This seems like a good place to start with our agent.

2) A knowledge agent is a good starting point; just make sure you have good knowledge

Probably the best way to start building an agent is with a straightforward question-and-answer agent. Agentforce uses knowledge differently than Einstein bots: it is smarter about pulling just the relevant pieces of information out of any kind of knowledge, whether that is articles stored in Salesforce or collections of information stored elsewhere.

Generative AI understands knowledge the way a human does. It understands headings, labels, and tables. The easiest input for an agent is name/value pairs. First name: Dave. Last name: McCall. Height: 6'1". Weight: …never mind.

Your goal is to create a comprehensive, human-readable knowledge base (if you haven’t already). The more information it contains and the better it is organized, the more accurately your agent will respond to questions. A little structure goes a long way.
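To make the name/value idea concrete, here is a minimal Python sketch that renders a structured record, such as a room type, as a heading followed by "Label: value" lines. The room data and the `to_name_value_text` helper are hypothetical, invented for illustration; this is not how Agentforce ingests knowledge, just one way you might prepare article content so it reads cleanly to both people and agents.

```python
def to_name_value_text(title: str, fields: dict[str, str]) -> str:
    """Render a heading followed by one 'Label: value' line per field.

    Hypothetical helper for illustration only; not an Agentforce API.
    """
    lines = [title]
    for label, value in fields.items():
        lines.append(f"{label}: {value}")
    return "\n".join(lines)


# Made-up room data for the fictional Thunder Bay resort.
room = {
    "Room type": "Deluxe Ocean View",
    "Bed": "One king",
    "Max occupancy": "3 guests",
    "View": "Ocean",
}

print(to_name_value_text("Thunder Bay Rooms", room))
```

Each fact sits on its own labeled line, so there is little ambiguity about which value belongs to which attribute.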

This is the article that was used to synthesize the answer. Note how the agent has pulled the information from a small section of the article.

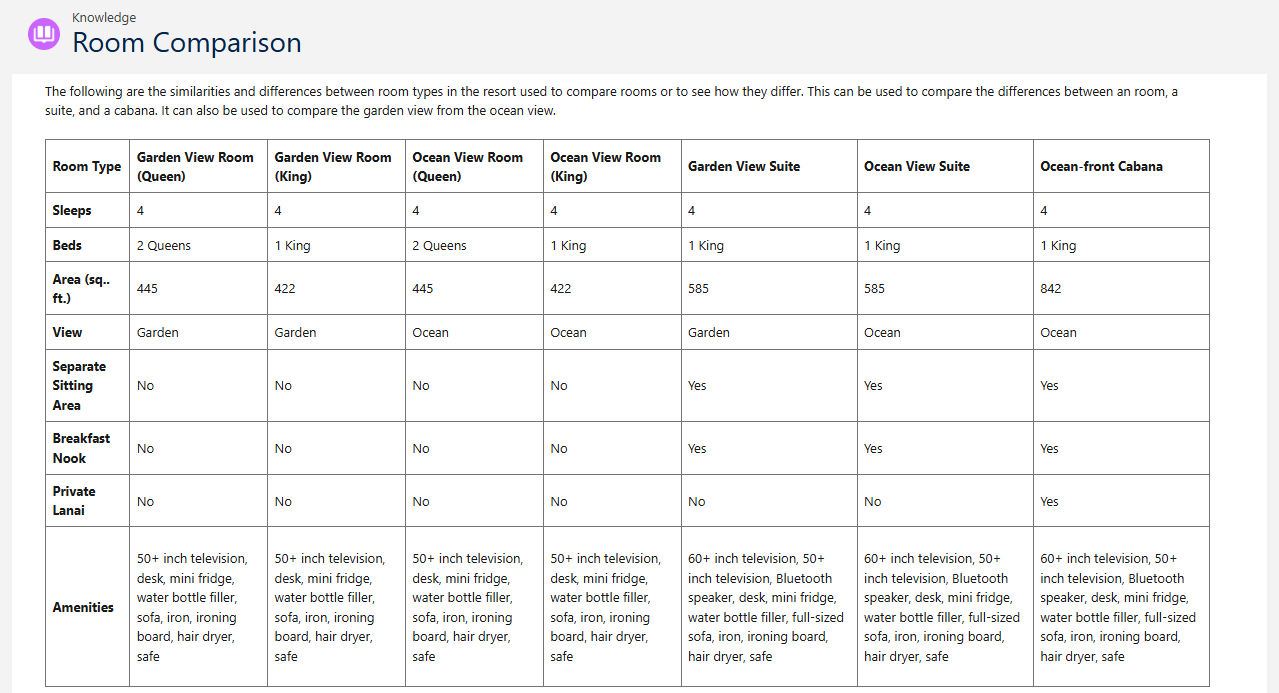

This is the article that was used to synthesize the answer. Note how the agent “understands” the data table in this article. Its instructions tell it to compare rooms by telling the user only the differences.

3) A good agent personalizes whenever possible

A question-and-answer agent is a good first step, but your users will want more. The right next step is an agent that does some amount of data retrieval to personalize its responses. Agentic AI is at its best when it is synthesizing information, combining multiple sources to provide answers, and bringing in the user’s personal data gives the agent more to synthesize.

Here, you can see that when I ask the agent about loyalty, it tells me about the customer’s loyalty status and points.

I can ask a generic question and get an answer directly from the knowledge base, but when I ask about my tier, it tells me about my tier specifically. When I ask what I can do with my points, the agent chooses something from a category that makes sense for the number of points I have.
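One way to support this, sketched here in Python under our own assumptions, is for the loyalty action to do the arithmetic and return only the rewards the guest can currently afford, leaving the agent to pick and phrase a suggestion. The catalog, point costs, `Reward` class, and `affordable_rewards` function are all hypothetical; they are not part of Agentforce or of the demo agent.

```python
from dataclasses import dataclass


@dataclass
class Reward:
    name: str
    category: str
    points: int


# Hypothetical reward catalog; real costs would come from your loyalty system.
CATALOG = [
    Reward("Welcome cocktail", "Dining", 500),
    Reward("Sunset catamaran cruise", "Experiences", 4_000),
    Reward("Spa massage", "Wellness", 6_000),
    Reward("Free night", "Stays", 15_000),
]


def affordable_rewards(balance: int, catalog: list[Reward] = CATALOG) -> list[Reward]:
    """Return the rewards the member can redeem now, most expensive first."""
    eligible = [r for r in catalog if r.points <= balance]
    return sorted(eligible, key=lambda r: r.points, reverse=True)


# A member with 7,200 points can afford everything except the free night.
for reward in affordable_rewards(7_200):
    print(f"{reward.category}: {reward.name} ({reward.points:,} points)")
```

Keeping the filtering deterministic in the action means the agent never has to do point math itself; it only has to present a sensible choice from the list it receives.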

You may also have noticed that the agent uses some of our company’s brand vernacular in its responses. In our case, it uses a little Hawaiian. This also personalizes the agent.

4) A good agent does things

No one calls your contact center to just ask about your return policy. If they ask about your return policy, they want to know if the item they have is something they can return and if it is, they want to initiate the return process. If you find that you’re not getting the value out of an agent that just tells people about your return policy…of course you aren’t.

If lots of customers asked your smart intern about returns, and all the intern could say was “It looks like you’re eligible to make a return” before passing them to someone else to start the process, it wouldn’t be a great experience. In short order, you’d be teaching that intern to process returns or finding something else for them to do.

Note that there might be complex computations or actions executing behind the scenes, perhaps integrations with other systems. That’s part of the joy of the agent: it doesn’t need to know everything the action does.

5) Keeping topics differentiated is one of the hard parts

This is going to be one of the hardest parts of building a complex agent that handles multiple types of requests.

To illustrate, imagine you have an agent for a jewelry retailer. That jewelry retailer has different processes for handling traditional jewelry and watches. It would be awkward if your agent didn’t know what someone meant when they said “I need to schedule a repair for my Rolex” or Patek or JLC. Every person at the jewelry retailer knows those are all watches. When building your agent, you might create two different topics, one for jewelry repair and one for watches, but your agent might have difficulty differentiating the two without complete knowledge of the brands you sell. You’d be forced to try to put all those brands in the instructions.

Unless…you don’t sort it out. You don’t create two different topics. You create a single repair topic, and if the agent has to do different things, you work that out in the instructions and actions behind the topic. Perhaps a jewelry repair requires the agent to open a case while a watch repair requires an API callout to your watch repair partner. You simply handle that by giving the agent the instructions and information it needs within a single repair topic. To the agent (and the customer), this is seamless.

Let me show you how this works with our agent. We originally tried to have two separate topics: one for standard special requests on the reservation and one for every other kind of request. To make that work, we tried to list all the standard requests in the topic description. That was a mistake. Here is what we did instead.

As you can see, while the topic is chosen based on its classification, the actions are selected based on a more robust set of instructions. By adding an action that queries the list of “special” requests, we give our hospitality agent exactly what it needs to determine when a case is necessary and when a simple record update will accomplish the task.
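The decision that the special-requests action enables can be sketched in a few lines of Python. This is a hypothetical illustration, not the demo agent's implementation: `STANDARD_REQUESTS`, `route_request`, and the two outcomes are assumed names standing in for the query action and the record-update and case actions. Exact string matching is a deliberate simplification; in the agent itself, the LLM does the matching, comparing the guest's wording against the list the action returns.

```python
from enum import Enum


class Outcome(Enum):
    UPDATE_RESERVATION = "update reservation record"
    OPEN_CASE = "open a case"


# Hypothetical list of standard requests that can be stored directly on the
# reservation. In the agent described above, an action queries this list at
# runtime instead of it being spelled out in the topic description.
STANDARD_REQUESTS = {"extra pillows", "crib", "late checkout", "high floor"}


def route_request(request: str, standard: set[str] = STANDARD_REQUESTS) -> Outcome:
    """Update the reservation for a known standard request; otherwise open a case."""
    normalized = request.strip().lower()
    if normalized in standard:
        return Outcome.UPDATE_RESERVATION
    return Outcome.OPEN_CASE


print(route_request("Late checkout").value)           # update reservation record
print(route_request("Rose petals on the bed").value)  # open a case
```

Keeping the list in data rather than in the topic description means new standard requests can be added without rewording the topic.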

6) Don’t build like an Einstein bot

An agent is so much better than a predictive bot (like an Einstein chat bot) because of context and the ability to switch between “modes” or even combine them. You need to test it the way a human would talk to it. Generative AI is less predictable, but it understands a lot more. We have to treat it more like a human than like an algorithm, in both the positive and negative senses. Back to my smart intern.

A smart intern will understand if the user says “I need my Rolex fixed” and then follows up with “how much will it cost for that”. Our agent understands that “that” refers to the Rolex repair, but you do need to test it. Don’t be too specific. Talk like people talk, and make sure the agent understands. You wouldn’t fire your intern for not getting everything right, and you shouldn’t expect more from your agent than from your smart intern. When your agent makes a mistake, it’s most likely not an issue of competency; it’s an issue of clear and sufficient instructions.

Note here that the user first asked whether there was pickleball and then, in their second message, simply asked to reserve “a court”. These are the places where generative AI feels both magical and blasé at the same time. Any human would understand that, but before generative AI it would have been much more complicated to handle.

Unfortunately, the same technology that makes that work also makes it a lot harder to predict, so you will need to test every use case much more thoroughly.

7) Your agent does not learn, it follows instructions

I confirmed this with the Salesforce product team: Agentforce agents don’t learn. This comes up a lot when people consider whether to build in a sandbox or in production (correct answer: sandbox). When you click thumbs up or thumbs down in Agent Builder, that feedback is logged to an object, but it isn’t used to inform subsequent responses. What does this mean? It means you have to get your instructions and actions right. They are our primary tools for tuning an agent, so keep honing them until they are robust enough to handle every edge case.

So bake your “learning” into the actions.

In this case, we have used machine learning to predict what people might like. So the learning is separate from Agentforce. It could be done more simply by tracking the popularity of different experiences and sorting based on that. Simply put, if you want your agent to learn, you need to implement the teacher.
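The simpler approach mentioned above, ranking experiences by popularity, needs only a small "teacher" that the recommendation action consults. The Python below is a hypothetical sketch, not the machine learning model used in the demo: `PopularityTracker` and the experience names are made up, and in practice the counts would come from booking records rather than an in-memory counter.

```python
from collections import Counter


class PopularityTracker:
    """Hypothetical 'teacher': counts bookings per experience and ranks them."""

    def __init__(self) -> None:
        self._bookings: Counter[str] = Counter()

    def record_booking(self, experience: str) -> None:
        """Count one more booking for the given experience."""
        self._bookings[experience] += 1

    def ranked(self, experiences: list[str]) -> list[str]:
        """Sort candidate experiences by booking count, most popular first.

        Unbooked experiences count as 0. sorted() is stable even with
        reverse=True, so ties keep their original catalog order.
        """
        return sorted(experiences, key=lambda e: self._bookings[e], reverse=True)


tracker = PopularityTracker()
for booking in ["Snorkel tour", "Luau", "Snorkel tour", "Pickleball court", "Snorkel tour", "Luau"]:
    tracker.record_booking(booking)

print(tracker.ranked(["Pickleball court", "Luau", "Snorkel tour", "Sunrise yoga"]))
# ['Snorkel tour', 'Luau', 'Pickleball court', 'Sunrise yoga']
```

The agent itself stays unchanged; only the data behind the action improves as more bookings are recorded.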

8) Longer instructions are better than lots of short ones

There are complex generative AI reasons for this that I’m not sure I could explain correctly, but our trial and error says it’s true. In fact, an agent seems to like run-on sentences (even if Mrs. Friedman, my high school English teacher, didn’t).

Also note that an agent follows all of your instructions at once. An instruction appearing first in the interface means nothing to the agent. If it needs to do things in a particular order, tell it what that order is. Be careful that your instructions don’t conflict with each other. As with a smart intern, conflicting instructions just cause confusion and mistakes.

9) Give it positive instructions whenever possible

I have been married for 25 years, and here’s some good marriage advice: tell your partner what you appreciate far more often than what you don’t. Positive messages are easier for people to act on. Somehow the same is true of agents. They do well when you tell them a lot about what you want them to do and only a little about what not to do. Your agent is looking for a direction to run. Point it in the right direction and it can run for miles, but tell it not to go in one direction and it might run in any other direction.

Unlike in your marriage, with an agent you should use “always” and “never” statements.

In the case of our agent, the instruction is absolute: always confirm the customer’s email address before sharing any reservation or experience information.

10) Estimate based on the actions, not the instructions

Estimating how long an Agentforce project would take was very difficult at the start, because we didn’t know what was going to take the time. It turns out it’s the actions.

Actions take the bulk of the work, especially integration actions. Instructions take some nuance and trial and error, but actions need to actually perform well.

In the end, building complex, multi-topic AI agents is an iterative process. By thinking of your agent as a smart intern, focusing on clear instructions, and prioritizing robust actions, you can create powerful tools to enhance user experiences and streamline operations.

Remember that refining the actions behind your agent is often where the bulk of the effort lies. These 10 key learnings from our experience at Thunder are just a starting point. Agentforce is rapidly evolving, so continuous learning and adaptation are essential. We encourage you to take these insights, experiment, and build great agents.

Agentforce for Your Organization

How can I benefit from Agentforce? The autonomous workforce of the future is compelling. However, applying these tools to every single use case will yield diminishing returns. Our goal is not only to build great agents, but to build agents that accomplish great tasks and add substantive value to their organizations.

Where there are man-hours, there is an opportunity for Agentforce. Identifying use cases and business processes that rely on a large amount of manual work and/or reasoning sets the foundation for a compelling Agentforce implementation. If the business process is well defined, the actions are digital, and the end user would prefer speed over personal interaction, this is the trail you want to go down when looking for an Agentforce application.

Additionally, where there is an abundance of unstructured data (PDFs, knowledge articles, and/or meeting transcripts), Agentforce can leverage the power of Data Cloud to step in and take action on that data. If extracting what is needed from the data currently requires trained eyes, first-hand knowledge, or organizational wherewithal, an agent with access to it will save time and effort parsing an organization’s archives.

A common pitfall is to apply Agentforce broadly for its own sake, resulting in low ROI and adoption that is difficult to measure. A more targeted approach might not encompass all that Agentforce can do for an organization, but it will provide a starting point and open the doors to new possibilities and compelling productivity improvements.

Dave McCall

Dave has been implementing technology for 25+ years. He leads innovation at Thunder, which keeps him experimenting with the latest technology. He’s part of the team at Thunder that is evolving a set of best practices for delivering implementations of Salesforce products and features. He lives in Chicago with his wife, three children, and a very energetic but not-so-smart dog.